Shannon entropy

Shannon entropy, named after rockabilly maths brainbox Claude “Dell” Shannon, is a measure of the average amount of information contained in a message. It quantifies the uncertainty or randomness in a set of possible messages. Shannon introduced the concept in his seminal 1948 paper “A Run-run-run-run Runaway Mathematical Theory of Communication.”


JC pontificates about technology

An occasional series. The Jolly Contrarian holds forth™


The formula for Shannon entropy is:

H = -Σ p(x) * log₂(p(x))

Where:

H is the entropy, measured in bits because the logarithm is base 2

p(x) is the probability of a particular message x

The sum is taken over all possible messages.

I only put that in for a laugh, by the way: I don’t have the faintest idea what that all means.
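For anyone who does want to know what it means, the formula translates almost symbol for symbol into code. This is a minimal Python sketch, assuming the probabilities are supplied as a list that sums to 1. `shannon_entropy` is a name made up for illustration, not a library function. Outcomes with zero probability are skipped, following the usual convention that 0 × log₂(0) counts as 0.

```python
import math

def shannon_entropy(probs):
    """Return H = -sum p(x) * log2(p(x)), in bits, for a list of probabilities.

    Assumes the probabilities are non-negative and sum to 1.
    Zero-probability outcomes are skipped (0 * log2(0) is taken as 0).
    """
    return -sum(p * math.log2(p) for p in probs if p > 0)

# A fair coin: two equally likely messages.
print(shannon_entropy([0.5, 0.5]))  # 1.0

# A coin that lands heads nine times in ten is more predictable.
print(shannon_entropy([0.9, 0.1]))  # roughly 0.469
```

A fair coin gives exactly one bit per toss. The biased coin is easier to guess, so each toss tells you less: a little under half a bit.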

English text has lower entropy than random characters because certain letters and patterns appear more frequently than others. Hence, compression algorithms: they exploit this non-uniform distribution to represent the same information with fewer bits.
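A rough way to see this is to estimate entropy from character frequencies: count how often each character appears and use those frequencies as p(x). The sketch below rests on simple assumptions. `empirical_entropy` is a hypothetical helper, the sample sentence is arbitrary, and the comparison string is drawn uniformly at random from the 26 lowercase letters plus space. Counting single characters ignores longer patterns such as “th” or “ing”, so it understates how predictable English really is.

```python
import math
import random
import string
from collections import Counter

def empirical_entropy(text):
    """Estimate bits per character, using single-character frequencies in `text` as p(x)."""
    counts = Counter(text)
    total = len(text)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())

english = "the quick brown fox jumps over the lazy dog and then the dog sleeps"

# Uniformly random characters from the same 27-symbol alphabet (a-z plus space).
random.seed(0)  # fixed seed so the run is repeatable
alphabet = string.ascii_lowercase + " "
noise = "".join(random.choice(alphabet) for _ in range(10_000))

print(f"English sample: {empirical_entropy(english):.2f} bits/char")
print(f"Random string:  {empirical_entropy(noise):.2f} bits/char")
print(f"Ceiling, log2(27): {math.log2(len(alphabet)):.2f} bits/char")
```

The random string is made long (10,000 characters) so that its frequency estimate settles close to the theoretical ceiling of log₂(27), about 4.75 bits per character. The English sample comes in noticeably lower, because letters like “e”, “o” and the space turn up far more often than “q” or “z”.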

The maximum possible entropy (and thus maximum information content) occurs when all symbols are equally likely to appear (uniform distribution). In this case:

H_max = log₂(n)

where n is the number of distinct symbols.
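A quick numerical check, as a minimal sketch: with n = 8 equally likely symbols the entropy formula gives exactly log₂(8) = 3 bits, and making one symbol far more likely than the others pulls the entropy below that ceiling. The value of n and the skewed weights are arbitrary choices for illustration, and `shannon_entropy` is a hypothetical helper name.

```python
import math

def shannon_entropy(probs):
    """Shannon entropy in bits; zero-probability outcomes contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

n = 8
uniform = [1 / n] * n
# Same eight symbols, but the first one now turns up half the time.
skewed = [0.5] + [0.5 / (n - 1)] * (n - 1)

print(shannon_entropy(uniform), math.log2(n))  # 3.0 3.0
print(shannon_entropy(skewed))                 # below 3.0
```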

I also don’t know what that means. But a string with maximum Shannon entropy (ergo maximum information content) is — paradox — random noise. It contains the maximum possible “information content” in terms of bits needed to represent it — it is computationally incompressible — but it has no meaningful or useful information in the conventional sense.

There is a distinction between information capacity, the theoretical maximum number of bits that can be encoded, and meaningful information: content that reduces uncertainty about something specific, which requires structure and pattern.

A completely random 1000-character string has maximum entropy but tells us nothing. A 1000-character English text has lower entropy but conveys actual meaning. On the other hand, a 1000-character string of one symbol repeating — minimum entropy — can be compressed a lot (“1000 x Z”), but it also tells us very little.
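The compressibility point can be tried with Python’s standard-library `zlib` module, standing in here for compression algorithms generally. In this minimal sketch, 1,000 bytes from `os.urandom` (random “characters” drawn from all 256 byte values) come out of the compressor essentially no smaller, and usually a few bytes larger because of zlib’s own framing, while 1,000 copies of “Z” shrink to a tiny fraction of their size. Exact sizes depend on the random input and the zlib version.

```python
import os
import zlib

random_bytes = os.urandom(1000)  # near-maximum entropy: every byte value equally likely
repeated = b"Z" * 1000           # minimum entropy: one symbol, over and over

for label, data in [("random", random_bytes), ("1000 x Z", repeated)]:
    compressed = zlib.compress(data, 9)  # 9 = strongest compression level
    print(f"{label:>9}: {len(data)} bytes -> {len(compressed)} bytes")
```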

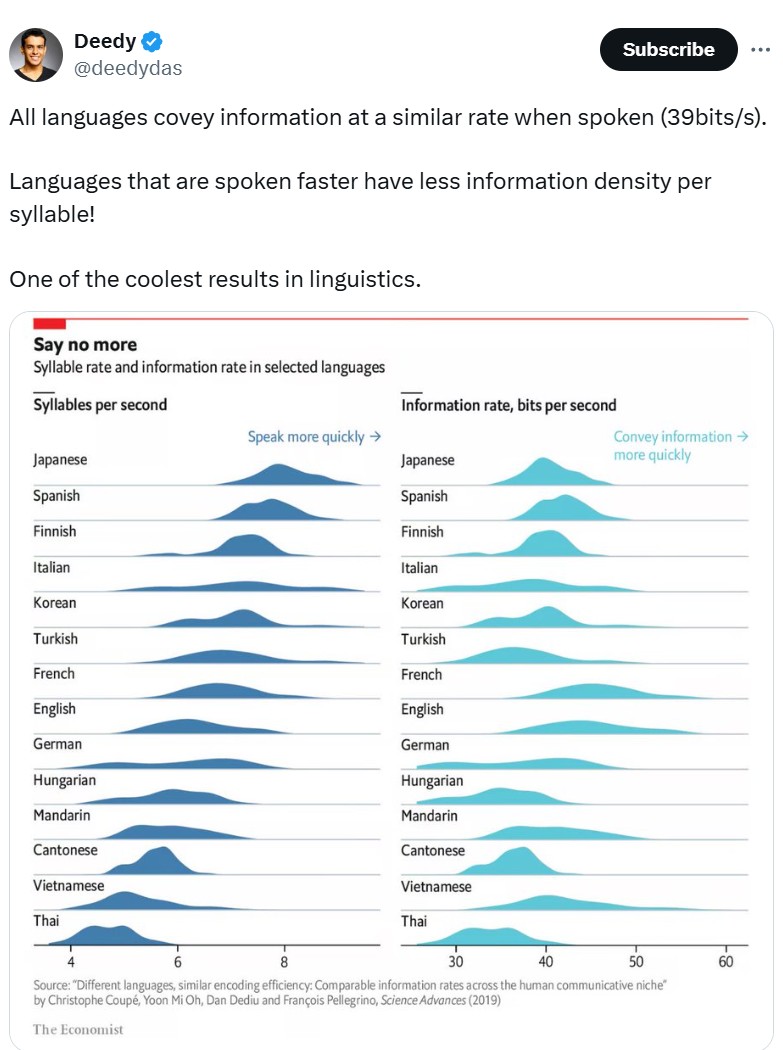

The apparent contradiction comes from how “information” is defined in information theory versus what it means in everyday language. Real communication systems work in the space between zero entropy and maximum entropy. They are structured enough to convey meaning but have enough variation to convey information. Efficient communication happens at an optimal balance point.

Human information is not like that

This is all relevant to the specific, limited way in which machines process symbols. It is not true of human language, given how the human interpretative act takes place.

Human language is rich with metaphor, symbolism, and cultural context, which code (sorry, techbros) just does not have. When I say “I hold a rose for you,” I could mean that I am literally holding a rose for you; that I am in love with you; that I have been tending you patiently and carefully, as one would a beautiful thing, but have still cut my fingers and you have wilted anyway; and so on. With enough imagination, there is an infinite number of messages one could take from that single statement.

This depth and ambiguity of meaning is a fundamental aspect of human communication that Shannon’s theory doesn’t account for. Shannon entropy treats messages as discrete symbols drawn from a known set of possibilities and is indifferent to what they mean; Shannon himself wrote that the “semantic aspects of communication are irrelevant to the engineering problem.” It assumes that sender and receiver share the same unambiguous code. Human communication doesn’t work this way.

This is a really nice thing because it is the route by which we escape from a distant future of inevitable brown entropic sludge.