Technological unemployment

“Any sufficiently advanced technology is indistinguishable from magic.”
(Arthur C. Clarke’s third law)

JC pontificates about technology: an occasional series.

One of the great dogmas.

As articulated by Keynes: “unemployment due to our discovery of means of economising the use of labour outrunning the pace at which we can find new uses for labour.”[1] You would think that this can only ever be a temporary effect: the entrepreneurial possibilities created by freeing labour up from one occupation to do anything else must mean that, in the long run, there can be no technological unemployment. History definitely tells us that. But just try telling that to Daniel Susskind. This time is different.[2]

To the JC’s simplistic way of looking at it, to believe the contrary is to be afflicted by, at least, a lack of imagination and, really, a lack of wisdom of the kind you can only get from the school of life. And, in any case, the whole edifice of technological development is founded on a different premise: that there is more than one way to skin a cat, and the history of technology is the accidental discovery of whole new ways of not just skinning old cats, but then figuring out what to do with the skins, and the cats.

The history of the world so far: we solve old problems, usually by accident. The old problem goes away and in its place we find a range of untapped, hitherto unimagined possibilities.

Machines aren’t awfully good at imagining hitherto unforeseeable possibilities, let alone figuring out how to exploit them. And no, being good at Go or Chess doesn’t falsify that observation.[3] Machines are good at doing what someone has figured out needs to be done, now, only faster. They require configuration, programming and implementation. Machines are faster horses. They won’t imagine an alternative future for you. Not even clever, artificially intelligent, seemingly magical machines.

We are in the middle of a Cambrian explosion of innovations. The one thing we can be assured won’t work right now is faster horses.

If there were only one way you ever could do things, and we had already found it, you technologists, futurologists and millenarians could get your coats. But that’s plainly nonsense. Did DARPA, when it invented the internet, have Gangnam Style in mind? Did Apple anticipate all the applications to which you could put an iPhone? Has the internet, or the smartphone, destroyed or created commercial activity?

Technology certainly threatens those who seek to operationalise labour: who look to take the easy, algorithmic bits that could and, really (if reg tech was any good), already should be done by robots, cheapen them and send them off to distant shores for processing by inexpensive young muppets.[4] Because that is a transparently stupid strategy in the first place.

Operationalisation is the process of trying to render the cosmic mundane — it is to ask to be superseded by robots, as you drive your business model, and your margins, into the ground.

But, yet, yet, yet: one thing we know technology will do is lower the barriers to interaction and communication. And one thing we know that the great huddled masses of mercantile foot-soldiers like to do is talk: as much as possible, and about as little of moment as possible, in as elliptical a way as possible. Visit LinkedIn, or Twitter (hell, just listen to anything that comes out of the middle-management layer of any decent-sized firm) if you really need persuading of this.

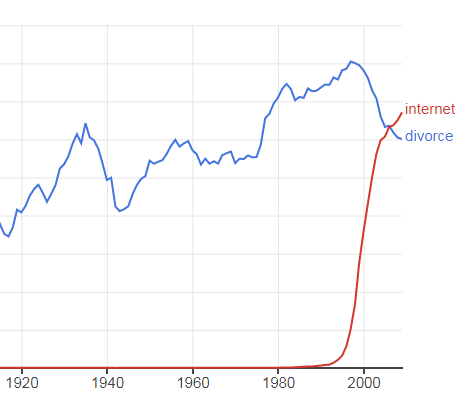

There is an equilibrium of sorts between the need to get stuff done and the need to vent your own opinions, and until that Berners-Lee fellow ruined everything, it was set quite delicately at a place where, for most of us, while achieving anything was hard, finding people to listen to your opinions was even harder, so we spent most of our time in morose silence slugging away at a hard rock-face with an old, soft-bristled, toothbrush. We had collected enough chips of slate to keep our employers happy and take a bit home to keep the hungry mouths around the Formica table passably filled with tinned foods. The only people around to hear our plaintive discursions about the ills of the modern world were those spouses and children, their mouths so crammed with baked beans as to be unable even to reply. The divorce rate was stratospheric.

Enter the internet and distributed networks: our tools are sharper, but suddenly talk is cheap. As such, you get what you pay for: a lot of cheap talk fills up the workplace. If we haven’t had enough of this quadrophonic noise by the time we come to clock out, we can vent the remains of our metaphysical angst into the howling, stone-deaf gale that is the world wide web, rather than the well-bent ears of our long-suffering spouses and their bean-stuffed toe-rags. We sit at our tele-screens and watch our rage boil off, evaporating harmlessly into the infinite, thundering dark.

I’m getting a bit carried away, aren’t I? But not entirely: see the chart above for what Google’s Ngram Viewer makes of the relationship between marital harmony and a fulfilled online life. But while Andy (and his successors) have been givething, it is not just Bill who has been takething away. The middle management layer has been doing its bit too, by, well, basically by inventing itself to occupy the space occupied by the crickets. And we take it that the productivity tools came on line from the mid-1980s: personal computers, the fax, e-mail and then this glorious thing called the world wide web.

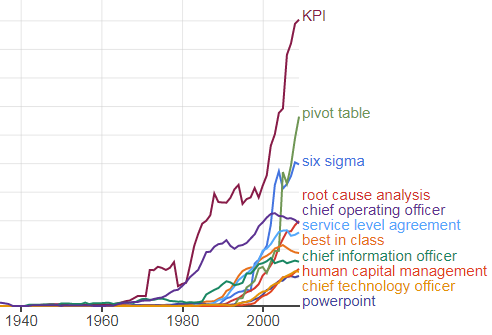

So, wouldn’t it be a gas to see when the middle management buzzwords started to come into the corpus? Well, fancy that.

References

- [1] Keynes, Economic Possibilities for our Grandchildren.

- [2] We say to him what we said a decade ago to Ray Kurzweil: “... it’s easy to be smug as I type on my decidedly physical computer, showing no signs of being superseded with VR Goggles just yet and we’re only six months from the new decade, [note: that was the last decade.] but, being as path-dependent as it is, the evolutionary process is notoriously bad at making predictions, until the results are in.”

- [3] Nassim Nicholas Taleb calls this the “ludic fallacy”. Let me Google that for you.

- [4] I mean no disrespect to said young muppets, only to their muppetmasters: their very strategy is to find cheap, uncomplaining operatives possessed of basic literacy and a pulse. They do not want bright young things, because bright young things get ideas.