Substrate: Difference between revisions

See also

- Metadata
- Tangible
- Jacquard loom
- Desktops, metadata and filing
- Turing machine
- Robomorphism

Revision as of 13:49, 14 October 2024


JC pontificates about technology

An occasional series.


Substrate

/ˈsʌbstreɪt/ (n.)

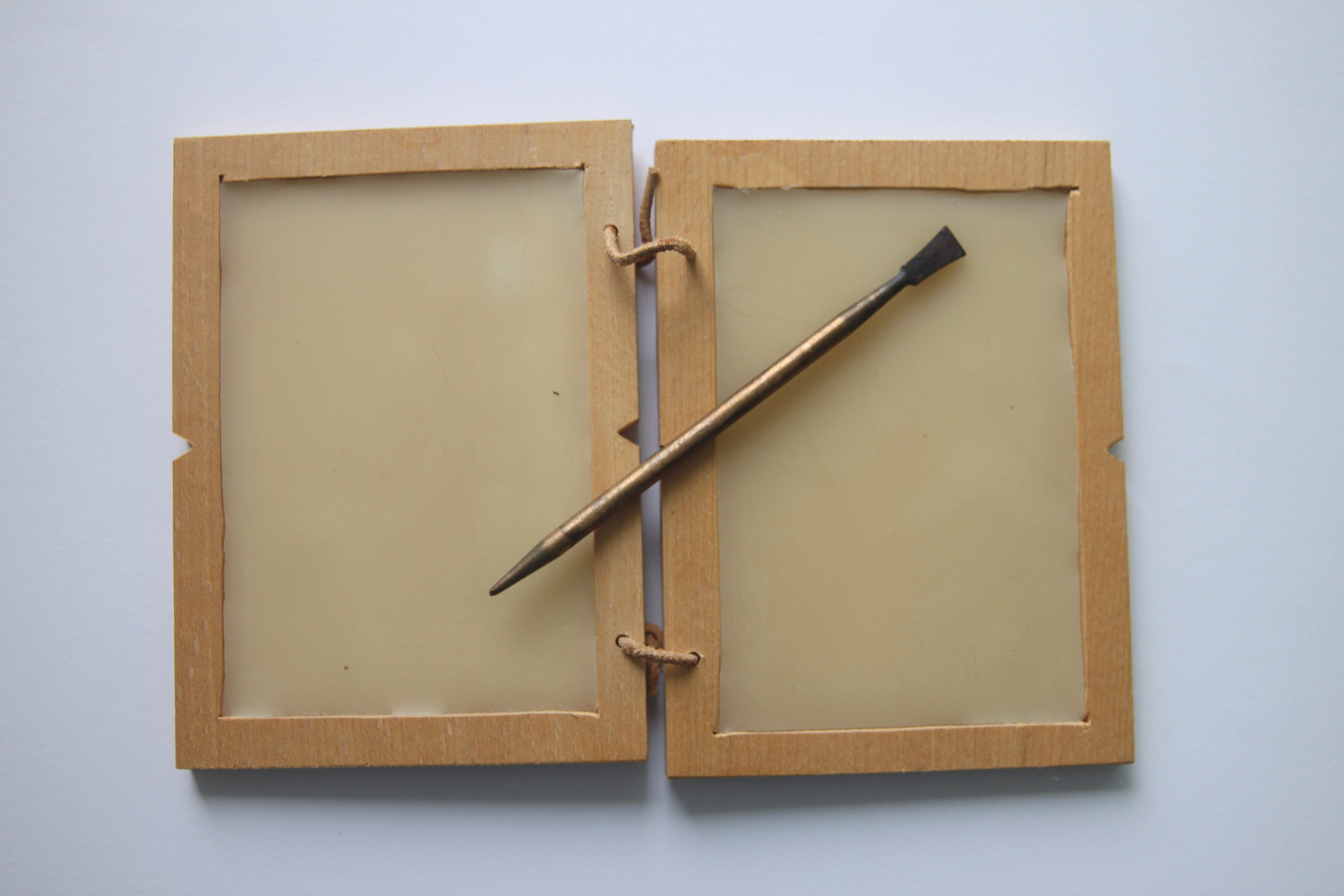

- The medium, not the message. An underlying substance or layer. The material on or from which an organism lives, grows or obtains its nourishment.

- A material which provides the surface on which something is deposited or inscribed. A waxen tablet, a punched card, a magnetic disc. A piece of paper.

Once upon a time the distinction between information and the substrate on which that information was conveyed was not apparent. The information in a letter, book, or newspaper was indistinguishable from the tangible paper on which it was printed.

But now.

(Sub-thread: is physically printed material “tangible” information? Or just a tangible substrate in which information is embedded?)

Substrate neutrality

In his fascinating book Darwin’s Dangerous Idea, the popularising philosopher Daniel Dennett used “substrate neutrality” to describe how cognitive processes and mental phenomena can be understood independently of the physical medium (“substrate”) in which they occur. What matters is their functional organisation, not what they are made of. Thus intelligence does not necessarily require carbon and a fleshy cerebellum, but may be built out of silicon (or, in the case of [MONIAC](https://www.ft.com/content/6f28a534-7eae-4762-9ab9-abef0ff00525), out of a hydraulic system).
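To make the idea concrete, here is a minimal sketch in Python. It is only an illustration, not anything Dennett or JC wrote: the names `Substrate`, `SILICON` and `HYDRAULIC` are hypothetical, and the “hydraulic” medium is a toy that treats a water level of 0.5 or more as “on”, not a model of how MONIAC actually worked. The point is that one procedure, binary addition built from a single NAND primitive, gives the same answer whichever medium supplies the primitive.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass(frozen=True)
class Substrate:
    """A hypothetical medium: how it stores a bit, reads it back, and does NAND."""
    name: str
    encode: Callable[[bool], Any]
    decode: Callable[[Any], bool]
    nand: Callable[[Any, Any], Any]


def add(substrate: Substrate, x: int, y: int, width: int = 8) -> int:
    """Ripple-carry addition (modulo 2**width) using only the substrate's NAND."""
    s = substrate

    def xor(a, b):
        n = s.nand(a, b)
        return s.nand(s.nand(a, n), s.nand(b, n))

    def and_(a, b):
        n = s.nand(a, b)
        return s.nand(n, n)

    def or_(a, b):
        return s.nand(s.nand(a, a), s.nand(b, b))

    carry = s.encode(False)
    result = 0
    for i in range(width):
        a = s.encode(bool((x >> i) & 1))
        b = s.encode(bool((y >> i) & 1))
        partial = xor(a, b)
        total = xor(partial, carry)
        carry = or_(and_(a, b), and_(partial, carry))
        if s.decode(total):
            result |= 1 << i
    return result


# Toy "silicon": signals are plain booleans.
SILICON = Substrate(
    name="silicon",
    encode=lambda bit: bit,
    decode=lambda signal: signal,
    nand=lambda a, b: not (a and b),
)

# Toy "hydraulic": signals are water levels; 0.5 or above counts as "on".
HYDRAULIC = Substrate(
    name="hydraulic",
    encode=lambda bit: 1.0 if bit else 0.0,
    decode=lambda level: level >= 0.5,
    nand=lambda a, b: 0.0 if (a >= 0.5 and b >= 0.5) else 1.0,
)

if __name__ == "__main__":
    for medium in (SILICON, HYDRAULIC):
        print(medium.name, add(medium, 19, 23))  # both print 42
```

The `add` function never inspects what a signal is made of. It only asks the substrate to encode, decode and NAND, which is all “functional organisation” amounts to in this sketch.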

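We should therefore focus on the functional and algorithmic aspects of cognition rather than the specific biological implementation. This is close to the heart of Darwin’s Dangerous Idea: Dennett’s claim that evolution by natural selection is itself a mindless algorithmic process, whose power comes from its logical structure and not from the materials that carry it out. This view supports the possibility of artificial intelligence and consciousness in non-biological systems. That seemed a bit outlandish in the 1990s, but in 2024, less so.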

Dennett uses this concept to argue for a computational theory of mind and against “mind-body dualism”.

Dennett’s work rather took the information technology crowd by storm, and the computational nature of the brain is, in my view, somewhat overplayed. JC calls this robomorphism. This is not to claim there is anything ineffable and sacred about carbon and fleshy cerebella, but rather to suggest that a Turing machine-style algorithmic model is simplistic and does not fully capture how human cognition and discourse work. You could build a generally intelligent machine, that is to say, but you would not start from a symbol-processing Turing machine if you were to do that.