Signal-to-noise ratio

Amwelladmin (talk | contribs) No edit summary |


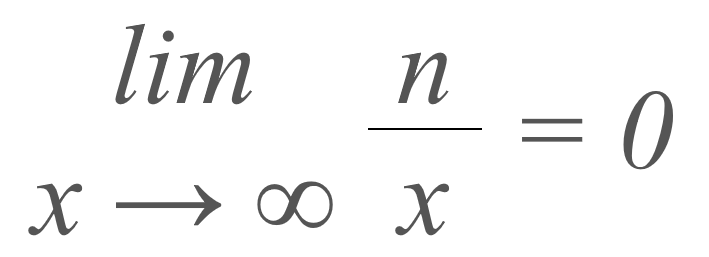

{{ | {{freeessay|systems|signal-to-noise ratio|{{image|Infinity|png|Where<br>“n” is the data in which you trust; and<br>“x” is the data you haven’t got yet.}}}} | ||

Latest revision as of 09:59, 18 July 2023


The JC’s amateur guide to systems theory™

The Jolly Contrarian holds forth™


Caught in a mesh of living veins,

In cell of padded bone,

He loneliest is when he pretends

That he is not alone.

We’d free the incarcerate race of man

That such a doom endures

Could only you unlock my skull,

Or I creep into yours.

—Ogden Nash, Listen..., reprinted in Candy Is Dandy: The Best of Ogden Nash

In God we trust, all others must bring data.

—Edwin R. Fisher

“Say you look at information on a yearly basis, or stock prices, or the fertilizer sales of your father-in-law’s factory in Vladivostok. Assume further that for what you are observing, at a yearly frequency, the ratio of signal to noise is about one to one (half noise, half signal) — this means that about half the changes are real improvements or degradations, the other half come from randomness. This ratio is what you get from yearly observations. But if you look at the very same data on a daily basis, the composition would change to 95 percent noise, 5 percent signal. And if you observe the data on an hourly basis, as people immersed in the news and market price variations tend to, the split becomes 99.5 percent noise to 0.5 percent signal. That is two hundred times more noise than signal — which is why anyone who listens to news (except when very, very significant events take place) is one step below sucker.”

—Nassim Nicholas Taleb, Antifragile
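Why should shorter observation windows be noisier? One common toy model, assumed here rather than taken from the quote, treats the data as a random walk with drift: the real change (signal) grows in proportion to the length of the window, while the random wobble (noise) grows only with its square root. The function name `signal_share`, the 365-day year and the 8,760-hour year are all illustrative assumptions.

```python
import math

def signal_share(window_years: float, drift: float = 1.0, vol: float = 1.0) -> float:
    """Share of an observed change that is signal rather than noise, assuming a
    random walk with drift: the signal (drift) grows in proportion to the
    observation window, the noise only with its square root."""
    signal = drift * window_years
    noise = vol * math.sqrt(window_years)
    return signal / (signal + noise)

# drift == vol calibrates the yearly split to one-to-one, as in the quote.
for label, window in [("yearly", 1.0), ("daily", 1 / 365), ("hourly", 1 / (365 * 24))]:
    share = signal_share(window)
    print(f"{label:>6}: {share:.1%} signal, {1 - share:.1%} noise")
```

Under these assumptions the sketch reproduces the yearly (50/50) and daily (roughly 5/95) splits, but gives about 1% signal for hourly data rather than the quoted 0.5%, so treat it as an illustration of the square-root effect, not a reconstruction of the quoted figures.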

There is no data

If the information content of the universe, through all time and space, is as good as infinite[1] and the data Homo sapiens has collected to date is necessarily finite[2] (even counting what we’ve lost along the way), it follows that the total value of our data — in which Professor Fisher would have us trust — is, like any other finite number divided by infinity, mathematically nil.
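In symbols, a sketch taking n as the data in which we trust and x as the data we haven’t got yet, and assuming x can grow without bound: whatever finite amount of data we hold, our share of the total shrinks towards nothing.

$$
\lim_{x \to \infty} \frac{n}{n + x} = 0 \qquad \text{for any fixed, finite } n \ge 0.
$$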

And that is before you consider the quality of our data. If 90% of all gathered data originates from the internet age,[3] a good portion of our summed human knowledge comprises cat videos, self-indulgent wikis and hot takes on Twitter, and so is shite data, even on its own terms.[4]

In any case, it follows that, even should we transcend our meagre hermeneutic bubbles and free the incarcerate race of man, so to speak, the ratio of our data — good, bad or indifferent — to all possible data out there in the universe, past and future, is infinitesimal.[5]

If this is what we’re meant to trust, you might ask what is so wrong with God. We are pattern-seeking machines. It’s not like we take the data as we find them, coolly fashioning objective axioms from them, carving nature at its joints: we bring our idiosyncratic prisms and pre-existing cognitive structures to the task — our own “hot takes” — and wantonly create patterns to support our pre-existing convictions.

Falsifications die

This is not a criticism as much as a piece of resignation: an observation. This is the doom our incarcerate race endures.

It is not just the Twitterati. Science, too, has its confirmation biases at a meta-level, uncontrollable even by double-blind testing methodologies. Experiments which confirm a hypothesis are a lot more likely to be published than those which don’t.[6] Of those failed experiments that are published, far fewer are cited in other literature. Falsifications die.
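A toy simulation shows how this filter distorts the record. It is a sketch, not drawn from the source: the number of studies, the standard error, the 1.96 significance threshold and the rule that only positive, significant results get published are all assumptions, and `simulate_publication_bias` is a hypothetical name. Every simulated study measures an effect that is truly zero, yet the published subset reports a large positive one.

```python
import random
import statistics

def simulate_publication_bias(n_studies: int = 10_000, true_effect: float = 0.0,
                              std_error: float = 1.0, threshold: float = 1.96,
                              seed: int = 42) -> tuple[list[float], list[float]]:
    """Run n_studies noisy measurements of the same true effect, then keep only
    those a (hypothetical) journal would publish: positive and 'significant'."""
    rng = random.Random(seed)
    estimates = [rng.gauss(true_effect, std_error) for _ in range(n_studies)]
    published = [e for e in estimates if e / std_error > threshold]
    return estimates, published

estimates, published = simulate_publication_bias()
print(f"all studies:    n={len(estimates)}, mean effect={statistics.mean(estimates):+.3f}")
print(f"published only: n={len(published)}, mean effect={statistics.mean(published):+.3f}")
```

The unpublished studies are the falsifications: they exist, but nobody reading the journals ever sees them.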

It happens in any and every social structure, even woke ones. Whoever occupies a position of standing or influence in a power structure wields the tremendous power to ignore ideas which don’t suit her own predilections. This isn’t mendacious, bad-faith behaviour: a core operating condition of any successful paradigm is that it filters out data which doesn’t help the programme (“noise”) and reinforces data which does (“signal”). As long as the power structure is not in crisis, “inconvenient”, “awkward”, “disruptive” and irrelevant suggestions are equally a distraction: they divert resources from the true path; they have the potential to upset the existing programme, and those who are conducting it. It is much easier to overlook the direction a new suggestion might take than to commit energy, time and resources to a fight you might end up losing. We all do it. Ask Kodak: someone there invented the digital camera. Management looked the other way: it didn’t suit the path the firm was on.

Falsifications die.

This is neither cause for alarm nor new. It is just a reminder of how important contingency, provisionality and, above all, humility are in all human discourse. Your data is likely bunk.

Problems are in the future

All of this is another way of attacking a familiar problem: the universe, the world, the nation, your market, your workplace and even your interpersonal relationships are complex, not just complicated. Mere complication is a function of a paradigm. It is part of the game. It is within the rules. It is soluble by sufficiently skilled application of the rules. Complication can be beaten by an algorithm. You can brute force it.
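To make “you can brute force it” concrete, consider a hypothetical four-digit combination lock. It is complicated in the sense above: the rules fix a finite space of 10,000 candidates, so an algorithm that simply tries them all in order is guaranteed to finish. The function name, the lock and its code are illustrative assumptions.

```python
from itertools import product
from typing import Callable, Optional

def brute_force(check: Callable[[str], bool], alphabet: str = "0123456789",
                length: int = 4) -> Optional[str]:
    """Try every string of the given length over the alphabet, in order,
    and return the first one that `check` accepts (None if none does)."""
    for combo in product(alphabet, repeat=length):
        guess = "".join(combo)
        if check(guess):
            return guess
    return None

secret = "7294"  # hypothetical lock code, for illustration only
print(brute_force(lambda guess: guess == secret))  # prints 7294
```

The search works only because the rules of the game (ten digits, four places, a lock that says yes or no) are fixed in advance; change them mid-search and exhaustion guarantees nothing.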

Complexity, you cannot. Complexity describes the limits of the narrative. Complexity is the wilderness beyond the rules of the game. Complexity inhabits the noise, and the data we do not yet have, not the signal. Where there is complexity, algorithmic rules do not work. Here data is relegated to noise.[7]

Behold the difference between complication and complexity: complication is of the past. It is known knowns and known unknowns: we can solve its problems with the information we already have, and derivations from it. But complexity is of the present and the future: its problems and opportunities are those which are currently unfolding, or which haven’t yet presented themselves.

This is why the physical sciences appear to have greater success than the social sciences: they ask themselves easier questions. Physical sciences generally address the behaviour of independent events: rolling balls, flipping coins, waves and/or particles of light. Rolling balls are not autonomous agents. They act independently: the behaviour of one will not influence that of another. Each coin flip is, as a condition of probability theory, independent.[8] Independent events may be modelled. That is to say, they may be complicated, but they remain predictable, at least in theory. When physical systems inexplicably go bang — Chernobyl, the Shuttle Challenger, the Torrey Canyon — the root cause will not be a failure of the physical science underlying the engineering, but some supervening cause invalidating the assumptions on which that science was based. Things go bang because of non-linear interactions.
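The predictability of independent events shows up in a few lines of simulation. This is a sketch: the seed, the number of runs and the number of flips are arbitrary choices, and `heads_fraction` is a hypothetical helper. Because each flip ignores every other flip, every run settles near the same long-run frequency of one half, which is the law of large numbers at work.

```python
import random

def heads_fraction(n_flips: int, rng: random.Random) -> float:
    """Fraction of heads in n_flips independent flips of a fair coin."""
    return sum(rng.random() < 0.5 for _ in range(n_flips)) / n_flips

rng = random.Random(1)
# Five separate runs of independent flips: each one lands close to 0.5.
runs = [heads_fraction(100_000, rng) for _ in range(5)]
print([round(r, 3) for r in runs])
```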

Social sciences don’t have that get-out-of-jail-free card: they address precisely that kind of supervening cause: behaviour that is, intrinsically, unpredictable. Psychology, sociology, anthropology, economics — these concern themselves with human agents, who are influenced by each other — which is why we don’t use physical science to predict their behaviour. Social sciences have to deal with the inherently complex, non-Gaussian interactions between human beings.[9]
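By contrast, here is a sketch of events that do influence each other, using the Pólya urn, a standard textbook model of self-reinforcing choice. Treating it as a stand-in for human agents influenced by one another is an assumption of this illustration, not something the source proposes, and `polya_urn` is a hypothetical name. Each draw makes the same colour more likely next time, so separate runs lock in to very different long-run proportions, and no amount of data from one run tells you where the next will end up.

```python
import random

def polya_urn(n_draws: int, rng: random.Random) -> float:
    """Pólya urn: start with one red and one blue ball; each draw puts the ball
    back together with another of the same colour, so every outcome shifts the
    odds of the next. Returns the final fraction of red balls."""
    red, blue = 1, 1
    for _ in range(n_draws):
        if rng.random() < red / (red + blue):
            red += 1
        else:
            blue += 1
    return red / (red + blue)

rng = random.Random(1)
# Five runs of the same process: the final proportions scatter widely.
runs = [polya_urn(100_000, rng) for _ in range(5)]
print([round(r, 3) for r in runs])
```

With one ball of each colour to start, the long-run fraction of red is itself uniformly random between 0 and 1: the process converges, but to a destination set by its own history.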

You can’t prove things that haven’t happened yet with data

It is said that the NASA scientists who feared that damage sustained by the space shuttle Columbia on launch posed a risk of catastrophic loss of the spacecraft on re-entry were required to prove their hunch with data.

Leave aside the difficulty of collecting any data about the exterior of a vehicle travelling at thousands of miles per hour in orbit above the earth’s atmosphere, let alone analysing it and drawing conclusions in the short time before it was due to return to earth. Note instead the profound logical fallacy in this request. When it was made, no shuttle had disintegrated on re-entry, even though several had sustained minor damage on launch. There was, therefore, no data to support the scientists’ fear.

This did not mean the scientists were wrong, as the world observed to its horror on 1 February 2003.
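The fallacy can also be put in numbers; this is an illustration, not a claim from the source. A clean record of n uneventful trials does not show that the risk is zero: it only caps it. Assuming independent trials with a constant failure probability p (itself a generous assumption), zero failures in n trials is still consistent, at 95% confidence, with any p up to 1 − 0.05^(1/n), which for large n is close to the statistician’s “rule of three”, 3/n. The figure of 100 re-entries below and the function name are hypothetical.

```python
def zero_failure_upper_bound(n_trials: int, confidence: float = 0.95) -> float:
    """Largest per-trial failure probability p still consistent, at the given
    confidence, with observing zero failures in n_trials independent trials:
    solves (1 - p) ** n_trials == 1 - confidence for p."""
    return 1 - (1 - confidence) ** (1 / n_trials)

n = 100  # hypothetical count of uneventful re-entries, for illustration only
print(f"exact bound:   {zero_failure_upper_bound(n):.4f}")  # about 0.0295
print(f"rule of three: {3 / n:.4f}")                        # 0.0300
```

A perfect record of 100 flights is, on this arithmetic, perfectly compatible with roughly a one-in-34 chance of disaster on each.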

Behaviourism and The Ghost in the Machine

Now, it wasn’t always like that. Fifty years ago, psychologists were waging a battle royale against the positivist branch of their own discipline, which insisted on proceeding by reference exclusively to “public events” and ignoring private mental events. Can you imagine it: a psychology which ignores private mental events? Can you imagine an approach to artificially reconstructing natural intelligence which ignores private mental events?

On the strength of this doctrine, the Behaviorists proceeded to purge psychology of all intangibles and unapproachables. The terms ‘consciousness’, ‘mind’, ‘imagination’ and ‘purpose’, together with a score of others were declared to be unscientific, treated as dirty words, and banned from the vocabulary. ...

It was the first ideological purge of such a radical kind in the domain of science, predating the ideological purges in totalitarian politics, but inspired by the same single-mindedness of true fanatics.

—Arthur Koestler, The Ghost in the Machine

You might ask what has changed, for the contemporary interest in neural networks, big data and natural language processing, all of which eschew the intentional fallacy, seems to adopt exactly the Behaviourist disposition. Doesn’t it? To be fair, these fields have no choice: if human psychologists are struggling to understand how consciousness works in situ, in the actual mesh of living veins, in cell of padded bone, is it any wonder that people looking at its proxy in a digital network might not bother?

See also

- Hindsight

- The Patterning Instinct: A Cultural History of Humanity’s Search for Meaning

- nomological machine

- Complexity

- Systems theory

- system redundancy

References

[1] This assumes there is not a finite end-point to the universe; by no means settled cosmology, but hardly a rash assumption. And given how little of it we have, the universe’s total information content might as well be infinite when compared with our finite collection of mortal data. Even the total information content generated by the whole universe to date, ungathered by mortal hand and not even counting the unknowable future, is as good as infinite.

[2] There is no data from the future.

[3] Eric Schmidt said something like this in 2011, and it sounds totally made up, but let’s run with it, hey?

[4] Get off Twitter, okay? For all our sakes.

[5] That means really small.

[6] The Hidden Half: How the World Conceals its Secrets, by Michael Blastland.

[7] Provisional theory: “information” is data framed with a hypothesis.

[8] The technical term: “platykurtic”.

[9] Physical sciences set up closed logical systems within which their rules will work, and often these systems are dramatically simplified compared with anything you see in the real world. Newton, for example, assumes a frictionless, stationary, stable, neutral frame of reference: circumstances which, in any observed environment, do not and cannot exist. Nancy Cartwright calls these structures “nomological machines”. Because of this explicit caveat, we can put any variance between Newton’s prediction and the observed outcome down not to falsification, but to the messy real world “contaminating” the idealised experimental conditions. Hence the proverbial crisp packet blowing across St Mark’s Square.