Artificial intelligence: Difference between revisions


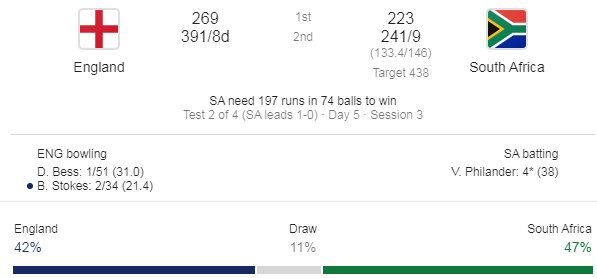

Why your job is safe: AI might whup Go grand-masters, but they don’t understand cricket.


Latest revision as of 21:09, 1 January 2024

JC pontificates about technology

An occasional series.

“Look, good against remotes is one thing, good against the living, that’s something else.”

- Han Solo

The automatic pilot is not much help with hijackers.

- John Gall, Systemantics: The Systems Bible

Artificial intelligence

/ɑːtɪˈfɪʃ(ə)l ɪnˈtɛlɪdʒ(ə)ns/ (n.)

1. An algorithm which deduces that, since you bought sneakers on Amazon last week, carpet-bombing every cranny of your cyber-scape with advertisements for trainers you no longer need is an effective form of advertising.

2. The technology employed by social media platforms like LinkedIn to save you the bother of composing your own unctuous endorsements of people you once met at a business day convention and who have just shared the stimulating time they’ve had at a panel discussion on the operational challenges of regulatory reporting under the securities financing transactions regulation, by composing an unctuous reply for you. “So inspiring!”

3. Machines are fungible.

4. Oh help me we lawyers are all doomed because of ChatGPT-3

Stand by for an essay. But first, answer this: why do we insist on making things easy for the machines? We seem progressively to be expected to align ourselves to the affordances and capabilities of machines, and to appraise ourselves on how good we are at things we know machines excel at. And then we are surprised, and alarmed, when machines can pass the bar exam.

The practical reason why your job is safe

Is that, for all the wishful thinking (blockchain! chatbots!), the “artificial intelligence” behind reg-tech at the moment just isn’t very good. Oh, they’ll talk a great game about “neural networks”, “natural language parsing”, “tokenised distributed ledger technology” and so on, but only to obscure that what is going on behind the misty veil is little more than a sophisticated Visual Basic macro.

Actually parsing natural language and doing that contextual, experiential thing of knowing that, yadayadayada boilerplate but whoa hold on, tiger, we’re not having that, isn’t the kind of thing a startup with a PHP manual and a couple of web developers can develop on the fly. So expect proofs of concept that work on a pre-configured confidentiality agreement in the demo (everything looks good on a confi during the pitch[1]) but will be practically useless on the general weft and warp of the contracts you come across in real life, as prolix, unnecessary and randomly drafted as they are.

There is amazing AI, but it’s not in financial services regulation

The thing is, there is some genuinely staggering AI out there, but it ain’t in reg tech: it is in the music industry. The AI drummer on Apple’s Logic Pro. Amp emulation. iZotope’s mastering plugins. These are genuinely amazing, and really are putting folks out of work/putting professional studio technology into the hands of talentless amateurs (delete as applicable).

If only iZotope realised how much money there was in reg tech, they wouldn’t be faffing around with hobbyist home recording types. Note, by the way, the sphere that musical AI operates in: the complicated and the simple. It is not required to interpret, problem-solve, trouble-shoot or solutionise. It has a fully determined job and it does it, fantastically.

Guess what: lawyers don’t operate in a fully determined environment. They have to problem-solve, trouble-shoot and solutionise. That is all they have to do.

The theoretical reason your job is safe

Strap in: we’re going epistemological.

Evolution does not converge on a platonic ideal. It departs from an imperfect present. It isn’t intelligent; it is a blind, random process reacting to unknown unknowns; it cannot anticipate the future where, as Criswell put it, “you and I are going to spend the rest of our lives”. Those unknown unknowns are random, complex, non-linear and dynamic. What is a dominant factor today might not be tomorrow[2], and the interdependencies, foibles and illogicalities that interact to create that process are so overwhelmingly complex that it is categorically impossible to predict. If you could, the pathway from a sharpened stick to the singularity would be dead straight, the development of technology would be a relentless process of refinement, all mysteries could be solved by algorithm, and we would be there by now.

BUT THAT’S NOT AT ALL HOW IT WORKS.

Evolutionary design space is four-dimensional. If you were to regard it from a dispassionate, stationary frame of reference (you know, a magnetic anomaly planted on the moon or something), you would see technological progress hurtling chaotically around the room like a deflating balloon.

From our position, on that balloon, the parts of design space we leave behind seem geometrically more stupid the further away we get. But we are not travelling in a straight line.

Bear in mind also that an artefact’s value is in large part a function of its cost of production. Absent a monopolistic impulse, that is the ceiling on its value. The moment you can digitise and automate something costlessly, its value drops to zero. No-one will pay you to do this for them because they can do it for themselves. This is just one of those unpredictable illogicalities that randomise the trajectory of evolutionary design.

Proposition therefore: value depends on the cost and difficulty of production.

If you successfully automate a complicated operation, the value of carrying out that operation drops proportionately, so to wring any value out of it you need to make it more complex. Note the complexity resides not inside that automated component, but in how that automated component interacts with the other components. By removing some cost and uncertainty, you’ve added more at a more abstract scale. The subject matter expertise required to understand that wider, systematic complexity is far greater than was required to manage that component pre-automation (by definition, right? you just automated it with a machine that does it for free. How hard can it be?).

The actual reason why your job is safe

More particularly, why artificial intelligence won’t be sounding the death knell of the legal profession any time soon: because computer language isn’t nearly as rich as human language. It doesn’t have any tenses, for one thing. In this spurious fellow’s opinion, tenses are the special sauce of consciousness, self-awareness, and therefore intelligence: they narratise a spatio-temporal continuity of existence that, as we have known since the time of David Hume, cannot be deduced or otherwise justified on logical grounds. If you don’t have a conception of your self as a unitary, thinking thing, through the past, at present and into the future, then you have no need to plan for the future or learn lessons from the past. You can’t narratise.

Machine language deals with past (and future) events without using tenses. All code is rendered in the present tense. Instead of saying:

- The computer’s configuration on May 1, 2012 was XYZ

Machine language will typically say:

- Where <DATE_x> equals “May 1 2012”, let <CONFIGURATION_x> equal “XYZ”

This way a computer does not need to conceptualise itself yesterday as something different to itself today, which means it doesn’t need to conceptualise “itself” at all. Therefore, computers don’t need to be self-aware. Unless computer syntax undergoes some dramatic revolution (it could happen: we have to assume human language went through that revolution at some stage) computers will never be self-aware.
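To make the contrast concrete, here is a minimal Python sketch of the tenseless style described above. The names `configurations` and `configuration_on` are invented for illustration, and the single record is the example from the text; nothing here is drawn from any real system.

```python
from datetime import date

# Hypothetical record store: each date is simply a key, each configuration a value.
# There is no "was" or "will be" anywhere, only a lookup.
configurations = {date(2012, 5, 1): "XYZ"}


def configuration_on(day):
    """Return the configuration recorded for `day`, or None if nothing is recorded."""
    return configurations.get(day)
```

Asking for `configuration_on(date(2012, 5, 1))` gives `"XYZ"` without the program holding any notion of its own past: the date is just another key.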

It can’t handle ambiguity

Computer language is designed to allow machines to follow algorithms flawlessly. It needs to be deterministic — a given proposition must generate a unique binary operation with no ambiguity — and it can’t allow any variability in interpretation. This makes it different from a natural language, which is shot through with both ambiguity and variability. Synonyms. Metaphors. Figurative language. All of these are incompatible with code, but utterly fundamental to natural language.

- It is very hard for a machine language to handle things like “reasonably necessary” or “best endeavours”.

- Coding for the sort of redundancy which is rife in English (especially in legal English, which rejoices in triplets like “give, devise and bequeath”) dramatically increases the complexity of any algorithms.

- Aside from redundancy there are many meanings which are almost, but not entirely, the same, which must be coded for separately. This increases the load on the dictionary and the cost of maintenance.
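A rough sketch of the cost the bullets above describe, in Python. The lookup table and the canonical label "transfer" are hypothetical; the point is only that every redundant or near-identical word has to be entered by hand, and anything left out fails outright.

```python
# Hypothetical synonym table: each variant must be listed explicitly.
CANONICAL_VERB = {
    "give": "transfer",
    "devise": "transfer",
    "bequeath": "transfer",
}


def normalise(words):
    """Map each word to its canonical form; an unlisted word raises KeyError."""
    return [CANONICAL_VERB[word] for word in words]


def collapse_triplet(words):
    """Return the set of distinct canonical meanings found in a phrase."""
    return set(normalise(words))
```

The triplet “give, devise and bequeath” collapses to a single meaning, but a word nobody thought to list, such as “grant”, is not quietly understood: it raises an error until someone extends the table.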

The ground rules cannot change

The logic and grammar of machine language and the assigned meaning of expressions are profoundly static. The corollary of the narrow and technical purpose for which machine language is used is its inflexibility: machines fail to deal with unanticipated change.

Infinite fidelity is impossible

There is a popular “reductionist” movement at the moment which seeks to atomise concepts with a view to untangling bundled concepts. The belief is that by separating bundles into their elemental parts you can ultimately dispel all ambiguity. A similar attitude influences contemporary markets regulation. This programme aspires to ultimate certainty: a single set of axioms from which all propositions can be derived. From this perspective, shortcomings in machine understanding of legal information are purely a function of a lack of sufficient detail, the surmounting of which is a matter of time, given the collaborative power of the worldwide internet. The singularity is near: look at the incredible strides made in natural language processing (Google Translate), self-driving cars, computers beating grand masters at chess and Go.

But you can split these into two categories: those which are the product of obvious (however impressive) computational feats, like chess, Go and self-driving cars, and those that are the product of statistical analysis, so are rendered as matters of probability (like Google Translate).
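As a toy illustration of the second category, here is a Python sketch that picks a translation by frequency alone. The counts are invented, and this is not how Google Translate or any real system works; it only shows what it means for an answer to be rendered as a matter of probability.

```python
from collections import Counter

# Invented counts: how often "bank" was rendered each way in an imaginary corpus.
observed = Counter({"banque": 90, "rive": 10})


def most_probable(counts):
    """Return the most frequent candidate and its estimated probability."""
    total = sum(counts.values())
    candidate, occurrences = counts.most_common(1)[0]
    return candidate, occurrences / total
```

The sketch will always answer “banque”, and on these made-up numbers it will be wrong whenever the sentence is about a river.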

If our continued existence depended on its chess-playing, we might commend our souls to the hands of a computer (well, I would). It won’t be long before we do a similar thing by getting into an AI-controlled self-driving car: we give ourselves absolutely over to the machine and let it make decisions which, if wrong, may kill us. But its range of actions is limited and the possible outcomes it must follow are obviously circumscribed: a single slim volume can comprehensively describe the rules with which it must comply (the Highway Code). Outside machine failure, the main risk we run is presented by non-machines (folks like you and me) behaving outside the norms the machine has been programmed to expect. I think we’d be less inclined to trust a translation.

- There is an inherent ambiguity in language (which legal drafting is designed to minimise, but which it can’t eliminate).

See also

- LinkedIn

- The Singularity, which may or may not[3] be near

- Rumours of our demise are greatly exaggerated

- On machine code and natural language

References

1. You could almost make a JC maxim out of that, come to think of it.

2. Just ask the inventors of, oh, the canal, the cassette, the mini-disc, the laserdisc, the super-audio CD, VHS, Betamax, the MP3 player ...

3. Spoiler: Is not.